Over the weekend, I checked out the Freakonomics documentary on Netflix. If you haven't read the best-selling book or its sequel, SuperFreakonomics, you really should. It's a fantastic look at "the hidden side of everything," in which economist Steven Levitt explains everything from baby name choices to why drug dealers live at home with their mothers, all based on hard numbers.

The film version explores several of the book's chapters, with one of the more intriguing segments focusing on how Levitt exposed corruption in sumo wrestling. By looking at a large volume of match results, he was able to show that wrestlers were engaging in a sort of you-scratch-my-back-I'll-scratch-yours system that protected their livelihoods. And here we thought only WWE matches were fixed.

I decided to apply a similar methodology to another area that has long been accused of corruption: video game reviews. Indeed, Metacritic co-founder Marc Doyle revealed last year that he had removed some unnamed publications from the site's review listings because of impropriety. "There's corruption – people can be bought, absolutely," he told CVG.

In that vein, I compared Metacritic's video game review scores with its movie review scores to see what sorts of differences might emerge. I've written before about the discrepancies between the two media as they pertain to the aggregation website – the movie ratings it compiles tend to come from critics employed by mainstream newspapers, while almost all game reviews come from dedicated gaming sites.

It's no secret that these trade publications score the products they cover higher than mainstream critics do, which, I've argued, is part of the larger problem with games journalism. As long as trade publications keep pumping up game ratings, publishers have little incentive to deal equitably with the mainstream media, where critics are more likely to be harsh. A few years ago, Dan Hsu, former editor of Electronic Gaming Monthly, detailed much of the shadiness that goes on at some of these publications in a series of blog posts.

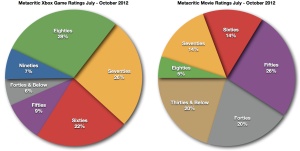

So how bad is the discrepancy between movies and games? Very, as the chart illustrates. To compile it, I gathered the scores of every wide-release movie from July through this past weekend (35 in total) and compared them against every game released for the Xbox 360 over the same period (51 in total). The results are interesting, to say the least.

The average movie score was 53 out of 100, while the average for games was 71. Far more intriguing, however, is the proportion of games that got high marks. More than 60% of games scored 70 or higher, while only 19% of movies did the same. Seven percent of games scored higher than 90 out of 100, a feat not a single film managed. At the other end, only 15% of games scored in the 50s or below, compared to two-thirds of movies.
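The bucket arithmetic above is easy to reproduce. The sketch below is a minimal illustration, assuming the Metascores have already been copied into plain Python lists. The helper name `summarize` and the scores themselves are hypothetical placeholders, not the 35 movies and 51 games behind the chart, and the low bucket is assumed to mean any score under 60.

```python
from statistics import mean


def summarize(scores):
    """Return the average and the share of scores in each bucket.

    Scores are assumed to be Metascores on a 0-100 scale. The low
    bucket is assumed to cover any score under 60.
    """
    n = len(scores)
    return {
        "average": mean(scores),
        "share_70_plus": sum(s >= 70 for s in scores) / n,
        "share_above_90": sum(s > 90 for s in scores) / n,
        "share_59_or_less": sum(s < 60 for s in scores) / n,
    }


# Hypothetical placeholder scores, NOT the data behind the chart.
movie_scores = [38, 45, 52, 57, 61, 48, 72, 55]
game_scores = [68, 74, 79, 81, 92, 58, 77, 85]

print("movies:", summarize(movie_scores))
print("games:", summarize(game_scores))
```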

The results suggest several possibilities, the unlikeliest of which is that game publishers are producing very few stinkers and significantly better content relative to Hollywood. Another is that game reviewers are simply more lenient – it's a well-known truism that most games are effectively scored on a scale of seven to 10, with anything below seven likely a kiss of death. A third is that game reviewers are more prone to groupthink, while film critics are more varied in their interpretations. Still another possibility is that Metacritic is not aggregating the right sources; movie ratings could be lower, for example, because mainstream critics are counted instead of trade publications.

Some of the discrepancy can probably also be explained by different rating scales: most publications score movies on a four- or five-point scale, while games are typically rated out of 10 or 100. Even after accounting for some of this, the gap between movie and game ratings is still very large.

The discrepancy doesn't prove corruption – a much larger Freakonomics-style sampling would be needed to even come close to such a conclusion – but it does suggest problems with how games are being reviewed or, more specifically, how they're being rated. You would expect the majority of any sort of product to be middling, with relatively few exceptions either excelling or failing. Movies definitely follow that pattern, with 60% scoring between 40 and 60. With games, only about 10% fall in that middle range. That just doesn't seem right, does it?
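The middle-range check can be sketched the same way by splitting scores into three bands. This assumes a middle band of 40 to 60 inclusive; `band_shares` is a hypothetical helper, and both score lists are invented for illustration rather than taken from Metacritic.

```python
def band_shares(scores, low=40, high=60):
    """Return the fractions of scores below, inside, and above [low, high]."""
    n = len(scores)
    below = sum(s < low for s in scores) / n
    middle = sum(low <= s <= high for s in scores) / n
    above = sum(s > high for s in scores) / n
    return below, middle, above


# Hypothetical placeholder scores, NOT the chart's data.
movies = [35, 42, 48, 51, 55, 58, 60, 73]
games = [62, 70, 74, 78, 81, 85, 88, 93]

print("movies:", band_shares(movies))
print("games:", band_shares(games))
```

A "middling" list piles up in the middle value of the returned tuple; the placeholder game list puts everything in the top band.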

BT

October 9, 2012 at 11:52 am

It's been a pet peeve of mine for quite a while that (most) game review sites don't use the whole scale.

El Presidente (@elquintron)

October 9, 2012 at 1:12 pm

It might be worth mentioning that games are reviewed on several levels, gameplay/execution, story/writing and graphical appeal to name a few, so games can score well on a few of them without necessarily being "fun" for everyone.

That being said, though, I've seen the sumo part of the Freakonomics documentary, and I'm sure some back-scratching is going on too.

craigbamford

October 12, 2012 at 5:03 pm

It's something that a lot of game criticism/reviewing discussions revolve around. It's somewhat complicated, especially because (unlike movies or books) games really are a medium that tends to improve over time. That's not surprising, since electronic games are still so technology-limited and since they're a very young (and dauntingly difficult-to-master) expressive form. It does mean, though, that you're probably going to have some kind of grade inflation unless reviewers consciously raise their standards over time.

That's going to be a bit controversial. If you give an 80 to a game that's demonstrably better than an old standard that got a 90 ten years ago, is that "fair"? Some people might think so, but the designers won't agree (especially since their bonuses can ride on Metacritic scores), and a lot of the fans won't agree either.

That “number vs. star” thing plays a gigantic role, too, one that I think you underestimated. A score out of 100 is going to inevitably be anchored at 100% in a way that a starred review simply isn’t. People assume that the reviewers start at 100% and then subtract points for any problems with the game. That isn’t necessarily how it works, but if that’s how people THINK it works, reviewers will catch a lot of hell from fans, developers, and publishers alike.

Sure, reviewers could stick to their guns, but for how long before they’re replaced by some bright (and cheap) starry-eyed former fan who DOES see things that way? How long before the exclusive preview content moves somewhere that WILL play ball? It’s not even corruption, necessarily: the publisher might honestly believe that that’s how 1-100 scoring is supposed to work!

(Especially if people assume it’s like school, where a 70% grade is of middling quality and a 50% is a dismal failure. Which they would, since that’s the sort of grading system they grew up with!)

A starred review doesn’t really have those problems. People just don’t anchor on “five stars” the way they anchor on “100%”. They get that 3 stars is fine, four stars is great, and five stars is excellent, without thinking of it as a percentage. So movie reviewers have more latitude, are less likely to get embroiled in controversy, and are more likely to actually have their reviews read.

That’s why a lot of good gaming outlets don’t even bother giving scores anymore. Sure, you aren’t on Metacritic. The tradeoff might well be worth it.